In March 2016, an AI-based computer program, AlphaGo, beat a world champion, Lee Sedol, at the ancient game of Go. This was a milestone of sorts, and a more significant one than the defeat of Garry Kasparov at the hands of IBM’s Deep Blue in 1997. Go is far more complex than chess, with 10^130 more possible moves than chess[i]. This means that a rule-based system would have difficulty scanning all possible responses for an optimal one. AlphaGo was based on a form of “Artificial Intelligence”[ii].
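To get a feel for the scale involved, here is a minimal sketch that puts the game-size estimates from footnote [i] on a common logarithmic scale. It assumes those figures are read as a typical number of choices per turn raised to a typical game length (about 250 over 150 turns for Go, about 35 over 80 turns for chess); they are rough estimates, and other counting methods give different numbers.

```python
import math

# Rough game-tree sizes, read as (choices per turn) ** (turns per game).
# Figures are the estimates quoted in footnote [i], not exact counts.
GO_BRANCHING, GO_LENGTH = 250, 150
CHESS_BRANCHING, CHESS_LENGTH = 35, 80
ATOMS_EXPONENT = 80  # ~10^80 atoms in the known universe, also from footnote [i]


def order_of_magnitude(base: int, exponent: int) -> float:
    """Return log10(base ** exponent) without building the huge number."""
    return exponent * math.log10(base)


go_exponent = order_of_magnitude(GO_BRANCHING, GO_LENGTH)
chess_exponent = order_of_magnitude(CHESS_BRANCHING, CHESS_LENGTH)

print(f"Go:    roughly 10^{go_exponent:.1f}")
print(f"Chess: roughly 10^{chess_exponent:.1f}")
print(f"Atoms: roughly 10^{ATOMS_EXPONENT}")
```

Under these assumptions both games dwarf the atom count, which is why exhaustively scanning every line of play is not an option.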

Since then, businesses have rushed to claim expertise in artificial intelligence (AI) or to describe the advances they expect to see in the near future. In fact, mentions of the phrase “artificial intelligence” during quarterly earnings calls have grown threefold[iii] over the last year. A number of businesses connected to AI have seen their share prices rise dramatically (Nvidia and Advanced Micro Devices are cases in point).

Is this the fourth industrial revolution?

Deep learning is the ability of a computer program to gain an abstract level of knowledge from churning through a lot of data and eventually apply it in new situations. The computers are not explicitly programmed to follow a rule; instead, the program can use its abstract understanding to take decisions when faced with new situations. While AI concepts have been around since the middle of the last century, the easy availability of massive amounts of data and computing power has enabled a resurgence in this field. Andrew Ng’s famous paper on deep learning describes a setup that puts some of these numbers in perspective. To be able to correctly identify a cat in YouTube videos, it took 10 million images, 1,000 machines (16,000 cores) and 3 days to train the algorithm.
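The idea of learning a rule from examples, rather than having it programmed in, can be sketched with a toy example. The code below is a single artificial neuron (a perceptron), which is far simpler than a deep network and is used here only as an illustration; the data, function names and parameters are all hypothetical. It is shown three labelled examples and is then asked about an input it has never seen.

```python
# Toy perceptron: the rule is never written down, only examples are given.
# Training data is invented for illustration: three of the four cases of logical OR.
training_examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1)]
unseen_input = (1, 1)  # deliberately left out of training


def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=10, learning_rate=1.0):
    """Nudge the weights after every wrong answer (the perceptron rule)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(training_examples)
print("learned weights:", weights, "bias:", bias)
print("prediction for unseen input:", predict(weights, bias, unseen_input))
```

The learned "knowledge" here is nothing more than two weights and a bias. Deep networks follow the same principle with millions of weights, which is why they need so much data and computing power.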

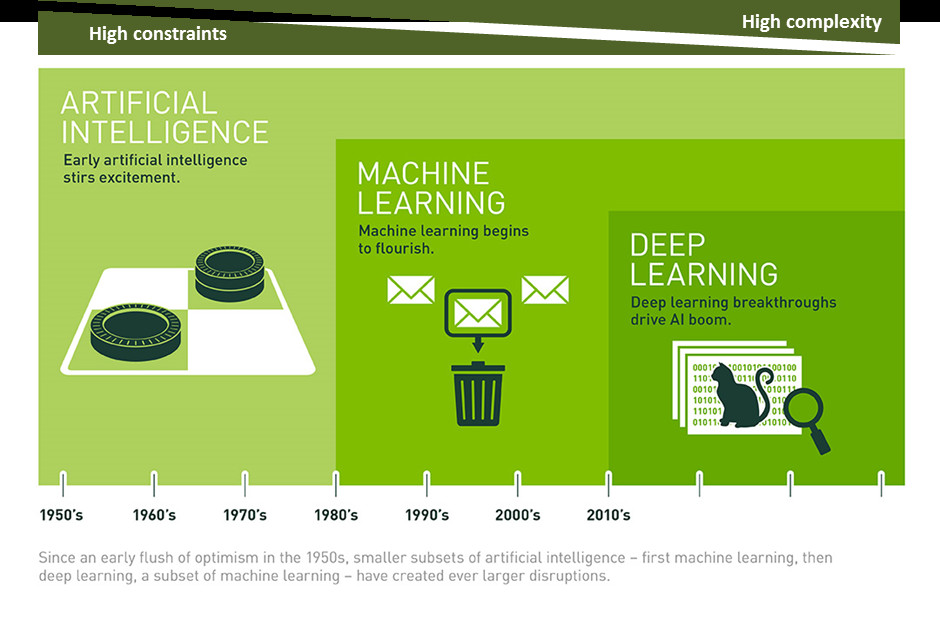

Exhibit 1: Timeline of Artificial Intelligence Technologies (Source: NVIDIA)

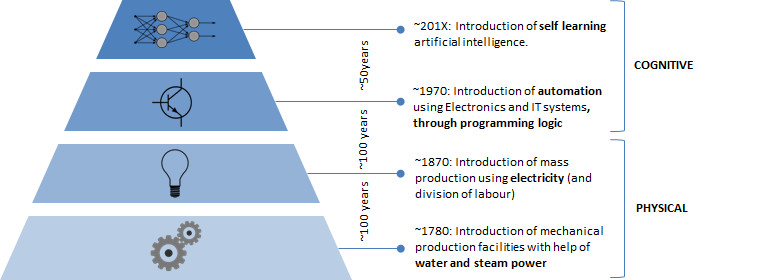

While the first two industrial revolutions enhanced our physical prowess (through mechanical energy from water and steam, and then through electrical energy), the third industrial revolution was already about enhancing our cognitive abilities (IT and computers: by programming rules-based logic into machinery). This fourth industrial revolution further enhances our cognitive abilities (AI: by removing the rules-based logic and instead building a certain level of understanding into machinery[iv]).

The shared benefit of each of the industrial revolutions is significantly improved productivity. The idea that a computer program does not need explicit programming and can learn by itself is non-trivial when it comes to scaling the productivity benefits.

Exhibit 2: AI: The fourth Industrial Revolution

What could artificial intelligence accomplish?

There is a long list of areas where AI can prove useful. No wonder every CEO appears to want to be AI-ready.

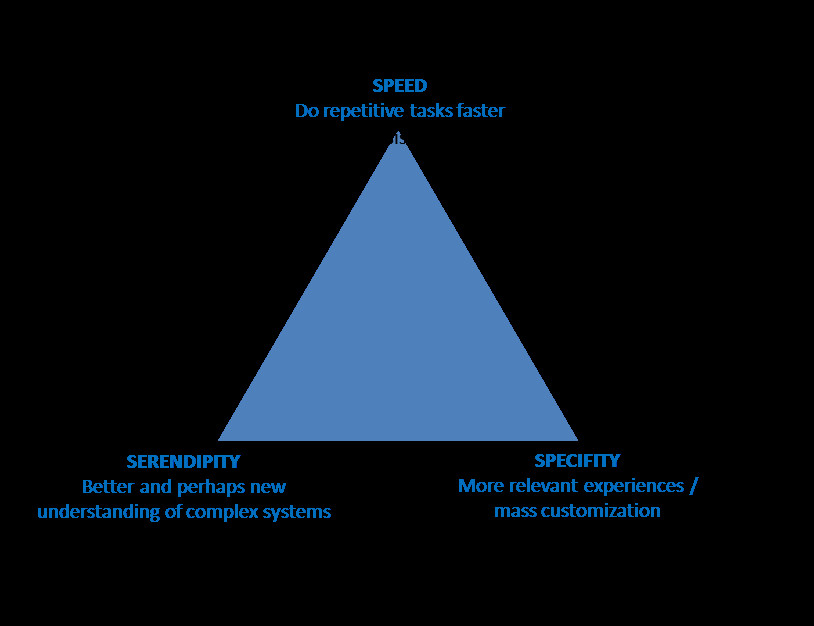

However, I believe we can categorise that potential into the following broad use-cases:

- Speed: Productivity would be a first-order benefit of AI. Because artificial intelligence-based algorithms self-learn, less human effort is needed to automate many tasks. This leads to significant productivity gains by speeding up routine tasks such as customer support, transliteration, driving, and reading medical reports or scans.

- Specificity: The self-learning aspect would mean the algorithm can provide individually relevant experiences to each user. Imagine a time when Amazon could predict (based on our shopping behaviour) what and when we need to buy, even before we realise we want to buy it.

- Serendipity: Finally, given the very scalable computing capacity now available to AI algorithms, a large number of simulations can be run to model very complex systems and understand them in ways that we haven’t yet explored. A real example of this was AlphaGo’s gameplay, which defied thousands of years of wisdom[v] with moves that eventually led to it winning against a champion Go player. Another example of a complex system where our understanding is limited is short-term price movements in the financial markets, where multiple participants with varying risk appetites and behavioural biases influence the price on a daily basis.

Exhibit 3: The three types of benefits of AI

Along with the promise come some risks. These risks have been discussed by various observers.

- The GIGO problem (garbage in, garbage out): An AI system would be only as good as the data on which it is trained. For example, an algorithm used for shortlisting candidates for an interview will pick up on any historical bias and apply it going forward.

- The stupidity argument: Some may not agree with the “intelligence” part in the term AI, given it requires tons of data before it can start producing useful results.

- Black box problem: Deep neural networks can have hundreds if not thousands of layers. The more layers a network has, the more levels of abstraction it can pick up on, which makes its operation more opaque. Because these are learning networks that are not explicitly programmed and that build their own internal representation of the problem they are trying to solve, how they make decisions is not known. Would you be willing to let an autonomous car drive you from Los Angeles to San Francisco, when you (or the experts) have very little insight into how the car makes the thousands of decisions it needs to make over the journey?

- Extreme productivity = massive job losses: When a tool enhances productivity, we can do more with less human involvement. AI is unique in that it will be the first time we can (almost) do away with any human involvement, and as such it would be hugely scalable as well as impactful. The magnitude and the speed of job losses created by this fourth industrial revolution could be unprecedented.

- Risk of general intelligence: Lastly, there is the threat of artificial general intelligence, or “self-aware” artificial intelligence. Most AI agents today work in narrow domains and have no agenda but to maximise their utility function. What if an AI programmed for increasing farm output starts to operate outside of the farm and starts taking over the planet to grow crops? What if it does everything it has to in order to achieve that goal? Elon Musk is worried that this is where the current efforts in AI could lead us, so he is working on OpenAI, a non-profit research company that wants to make safe general intelligence. Stephen Hawking has warned that the creation of powerful artificial intelligence will be “either the best, or the worst thing, ever to happen to humanity”.
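The GIGO risk in the list above is the easiest one to make concrete. The sketch below is a deliberately crude stand-in for a learning algorithm: it "trains" by memorising how often each group of candidates was shortlisted in the past. The records, group labels, function names and threshold are all invented for illustration, and real systems are far more sophisticated, but the mechanism by which a historical skew carries over into future decisions is the same.

```python
from collections import defaultdict

# Hypothetical historical records: (group, was_shortlisted).
# The data is invented and deliberately skewed against group "B".
history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 20 + [("B", False)] * 80
)


def fit_shortlist_rates(records):
    """'Train' by memorising the historical shortlisting rate of each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [shortlisted, total]
    for group, shortlisted in records:
        counts[group][0] += int(shortlisted)
        counts[group][1] += 1
    return {group: hits / total for group, (hits, total) in counts.items()}


def shortlist(model, group, threshold=0.5):
    """Shortlist a new candidate if their group's learned rate clears the threshold."""
    return model[group] >= threshold


model = fit_shortlist_rates(history)
print("learned rates:", model)
print("new candidate from A shortlisted?", shortlist(model, "A"))
print("new candidate from B shortlisted?", shortlist(model, "B"))
```

Nothing in the code mentions bias, yet the decisions for new candidates simply replay the skew in the training records.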

The final obvious question, then, is what we can do to make sure that AI is the “best thing ever to happen to humanity” and not the worst.

- Data is key: It goes without saying that both the quality of the data and any bias in it need to be actively managed in order to make sure we don’t unintentionally program in biases.

- Stupid but simple: I wouldn’t dismiss the neural networks of today that use a lot of data to learn as stupid. Of course their representation of knowledge is not optimal (it takes the form of the strengths of the connections in an artificial neural network) and their learning is slow (it takes time to iterate), but for now this approach works as we have no scarcity of data. However, the next generation of algorithms will surely need smaller data sets. Many teams are working on such approaches, including Numenta, Geometric Intelligence, Kimera and Vicarious.

- A matter of learning a new language: The current lack of insight into how an AI makes decisions is due to its different representation of knowledge. But could a matrix of weights be translated into language as we understand it? I don’t know. Maybe we can train simple AI (where we understand the logic and can supervise learning) to start translating its rationale for us. If we extend this logic far enough, could we teach the AI to translate its representation into how we represent knowledge?

- An AI tax: Bill Gates recently made this seemingly controversial suggestion[vi], but is there merit in it? I think it’s a brilliant suggestion, at least for a transition period until the workforce can be retrained. The issue with productivity gains is that they accrue to capital owners. Taxes on AI could be the source of funds to retrain the displaced workforce.

- Threat of general intelligence: I’m a bit relaxed about this, as most AI we know today is some form of complex correlation exercise, applicable in very narrow domains. Is there a risk that all these narrow AIs collaborate to form a super AI that would destroy humanity? I don’t know, but I do know that we are far away from such a point (and indeed I doubt we will ever get there). There are groups lobbying for a “master off” button of sorts to be built into AI in case such a day arrives. I believe some sort of standards body should emerge over time to promote “good practices”, just as they exist in coding!

I remain optimistic and look forward to the coming fourth industrial revolution!

In the long term, from an investment point of view, the resurgence of artificial intelligence will lead to a growing need for computing power, which benefits semiconductor and equipment makers. The productivity benefits resulting from the use of AI would be split between the end users (consumers and businesses) and the software vendors.

Disclaimer: This is a discussion of broad technology trends and not investment advice. Any investment decisions made are your own and at your own risk.

All views, opinions, and statements are my own.

[i] Go has 250^150 moves and chess has 35^80 moves. To put the scale of these numbers in perspective, the entire universe (as much as we know) has ~10^80 atoms.

[ii] For those interested: in particular, a combination of Monte Carlo (game) tree search and deep (convolutional) neural networks.

[iii] Document Search on Sentieo Plotter for Transcripts for “AI or Artificial Intelligence or machine learning or Deep learning”

[iv] There are some extending the AI progress towards Cybernetics – potentially interfacing a human mind with an artificial intelligent brain that one could use for certain kinds of compute / memory / search intensive tasks – but I am not ready to make that leap of faith yet, given our limited understanding of the human brain.

[v] “Google’s AI won the game Go by defying millennia of basic human instinct”, Quartz, March 25, 2016

[vi] “Robots that take people’s jobs should pay taxes, says Bill Gates”, The Telegraph, February 20, 2017