This blog post first appeared on equitiesforum.com.

Are today’s technology titans exploiting their market position without any regard for broader stakeholder welfare? This, essentially, is the implicit question running through most media coverage today.

Given the recent news flow, it certainly feels like technology has had a rough start to the year. In the past few weeks, technology stocks have markedly underperformed on the back of negative headlines: Zuckerberg’s testimony in Washington, Uber’s self-driving car crash, a Tesla recall and President Trump’s tweets against Amazon. Although Google has managed to avoid the recent limelight, it was (not so long ago) hit with a EUR 2.4bn fine for allegedly breaching anti-trust rules.

In my view, these allegations fall into three categories: 1) anti-competitive practices; 2) ownership of content; and 3) compromised user privacy. I will tackle the latter two in this post.

Teething problems or poor incentives?

Before looking at the specific situation today, it’s worth taking a step back. This is not the first time we have been here.

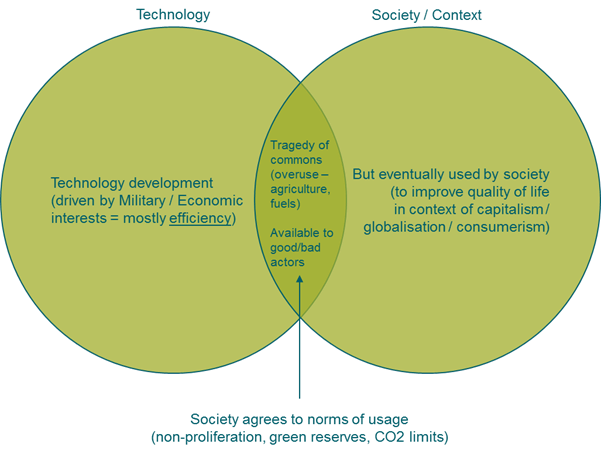

Technology, very broadly defined, is a tool that enhances productivity, and it is often developed with that sole criterion in mind. This isolation from “real life” helps innovation and progress. But once this highly efficient tool is used widely in the real world, it faces two major issues: a) the tragedy of the commons / prisoner’s dilemma[i]; and b) its availability to good and bad actors alike.

This is where we, as a society, agree on ‘norms of usage’. Nuclear technology is great for producing cleaner energy, but it can equally be used to build nuclear weapons. Internal combustion engines have greatly improved our mobility. As individuals, we have every incentive to make the most of such technology to improve our mobility and productivity, but over time society agrees on norms of usage (such as limits on CO2 emissions).

The internet is no different. It has given us a lot of value (in terms of content and tools) for free, in exchange for advertising. At the same time, it has been abused by several bad actors; fake content and compromised user privacy are two issues arising from that abuse. Does that mean that the internet, and today’s technology titans riding its coat-tails, are all bad? Or does it mean it is high time to agree on ‘norms of usage’ for this technology? Another word for that is regulation.

Part of the reason bad actors got to where they are today is the policy of neutrality practised by these platforms. Why did the platforms adopt it? Because of a 1996 law, Section 230 of the Communications Decency Act (“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”), which shelters internet intermediaries from liability for the content their users post. While this made sense in the early days of the internet to encourage innovation and growth, it makes little sense today for platforms such as Facebook to shirk responsibility for the user content they host.

Will technology come to its own rescue?

Most likely, yes, once the poor incentives behind platform neutrality are corrected.

Facebook has repeatedly said that 99% of terrorist content is taken down, with the help of artificial intelligence (AI) tools[ii], before it is flagged by users. Other platforms are equally capable of using AI tools to flag objectionable content. Such automated moderation and takedown of ‘bad’ content should help rebuild trust in these platforms. This type of regulation (ownership of content) is increasingly being accepted by the likes of Facebook, with Mark Zuckerberg acknowledging that the company is ultimately responsible for the content on its platform. Facebook plans to double the number of people working on cybersecurity and content moderation to 20,000 in 2018.

With respect to user privacy and identity, I believe there are two fixes. The first fix is behavioural: increased scrutiny will make the platforms more responsible. Facebook may not have restricted rogue apps’ access to user data in the past, but after the Cambridge Analytica scandal it will need to guard against such lapses. That means giving users more transparency and control over how, and with whom, their information is shared.

The second fix, in my view, will come from technology itself: blockchains[iii], and more specifically blockchains that use zero-knowledge proofs. In layman’s terms, this cryptographic technique makes it possible to prove something (in this case, a user’s identity) without revealing any of the information that goes into the proof. This allows user data to be fully anonymized, with no link to sensitive or identifying details. Combined with smart contracts (a feature of the Ethereum blockchain), it could give users full control over who can access what information. A central authority, such as a government, could issue these identities. For example, the World Food Programme’s (WFP) Building Blocks project already uses a zero-knowledge blockchain to dispense aid to Syrian refugees.
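To make “proving something without revealing it” a little more concrete, here is a minimal sketch of the Schnorr identification protocol, a classic textbook zero-knowledge proof that a prover knows the secret x behind a public key y = G^x mod P. This is a swapped-in illustration, not the proof system used by Ethereum, the WFP project or any particular blockchain. The parameters P, Q and G are toy values chosen for readability (far too small to be secure), and all function names are hypothetical.

```python
import secrets

# Toy public parameters (hypothetical; far too small for real security).
# P is a safe prime (P = 2*Q + 1, with Q prime) and G = 4 generates the
# subgroup of order Q, because 4 is a quadratic residue mod P and not 1.
P = 2039
Q = 1019
G = 4


def keygen():
    """Prover's secret x and public key y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1  # x in [1, Q-1]
    return x, pow(G, x, P)


def commit():
    """Step 1: prover picks a fresh nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x, r, c):
    """Step 3: prover answers challenge c with s = r + c*x mod Q."""
    return (r + c * x) % Q


def verify(y, t, c, s):
    """Verifier accepts iff G^s == t * y^c (mod P); x itself is never sent."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


def run_protocol(x, y):
    """One honest round: commit, random challenge, response, check."""
    r, t = commit()
    c = secrets.randbelow(Q)  # Step 2: verifier's random challenge
    s = respond(x, r, c)
    return verify(y, t, c, s)


def simulate(y):
    """Forge an accepting transcript WITHOUT knowing x: pick c and s first,
    then solve for t = G^s * y^(-c). Honest transcripts have the same
    distribution, so they leak nothing about x (against an honest verifier)."""
    c = secrets.randbelow(Q)
    s = secrets.randbelow(Q)
    t = (pow(G, s, P) * pow(y, -c, P)) % P  # negative exponent needs Python 3.8+
    return t, c, s


if __name__ == "__main__":
    x, y = keygen()
    print("honest prover accepted:", all(run_protocol(x, y) for _ in range(20)))
    print("simulated transcript accepted:", verify(y, *simulate(y)))
```

The `simulate` function carries the intuition: anyone can produce a transcript that looks exactly like a real one without knowing x, so seeing a real transcript teaches the verifier nothing beyond the fact that the prover knows x. Real deployments typically replace the interactive challenge with a hash (the Fiat-Shamir heuristic) and use far larger groups or elliptic curves.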

Regulation is a double-edged sword

At the end of the day, regulators will have a difficult job. On the one hand, they want to hold platforms responsible for their content and for handling user data responsibly, thereby creating norms of usage. On the other hand, regulation typically raises barriers to entry. Smaller firms and new entrants may find it difficult to satisfy the regulatory requirements, and may lose access to the kind of data that behemoths such as Facebook already have. This could entrench the market position of the very technology titans that regulators are trying to rein in. Conversely, while data portability could neutralize platform power, it would also expose the data to abuse by bad actors.

There will be a tricky balance to strike between protecting users from abuse and limiting the platforms’ market power.

Disclaimer: This is a discussion of broad technology trends and not investment advice. Any investment decisions made are your own and at your own risk. All views, opinions, and statements are my own.

[i] The prisoner’s dilemma is a paradox in decision analysis in which two individuals acting in their own self-interest pursue a course of action that does not result in the ideal outcome. In the typical setup, both parties choose to protect themselves at the expense of the other participant. As a result of following a purely logical thought process, both end up worse off than if they had cooperated with each other.

[ii] In case I haven’t already driven home the point about technology’s dual nature, it is worth noting again that the same AI tools that flag objectionable content can also be used by bad actors to create more fake content, both text and video.

[iii] A blockchain is a distributed ledger in which transactions, activity or other information can be recorded chronologically and publicly. Because the records are secured with cryptography and each block is linked (“chained”) to the one before it, it is almost impossible to alter the data retroactively. Interestingly, blockchain technology is also the biggest threat to established digital platforms, due to its ability to democratize “trust” by decentralizing the record.
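The “chaining” idea can be sketched in a few lines, assuming a single in-memory list of records. This deliberately leaves out the network, replication and consensus that make a real blockchain a distributed ledger; the function names are hypothetical. Each block stores the SHA-256 hash of its predecessor, so editing any earlier record breaks the link to everything after it.

```python
import hashlib

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(prev_hash: str, data: str) -> str:
    """Hash a block's contents together with its predecessor's hash."""
    return hashlib.sha256((prev_hash + data).encode("utf-8")).hexdigest()


def build_chain(records):
    """Append records in order, linking each block to the previous one."""
    chain, prev = [], GENESIS
    for data in records:
        h = block_hash(prev, data)
        chain.append({"data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def is_valid(chain) -> bool:
    """Recompute every hash and link; any retroactive edit is detected."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(prev, block["data"]):
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dan 1"])
    print(is_valid(chain))  # True
    chain[0]["data"] = "Alice pays Bob 500"
    print(is_valid(chain))  # False: block 0's stored hash no longer matches
    chain[0]["hash"] = block_hash(chain[0]["prev"], chain[0]["data"])
    print(is_valid(chain))  # False: block 1 still points at the old hash
```

Patching the tampered block’s hash only moves the break to the next block. A forger would have to rewrite every later block and, on a real replicated ledger, also convince the rest of the network to accept the rewritten history, which is why retroactive edits are “almost impossible” rather than merely detectable.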